Is Your Enterprise Ready to Rise with the Machines?

AI, machine learning bring surge in memory demand

Another industrial revolution is upon us, driven by a myriad of technologies that generate an influx of data. At its core is artificial intelligence (AI), in which machines learn to think like humans, and its increasing adoption is altering every facet of society.

What exactly is AI? TechTarget defines AI as "the simulation of human intelligence processes by machines, especially computer systems." These processes include learning, reasoning and self-correction.

AI is already prevalent today, though probably not yet in the form often seen in movies. In daily life, AI is used to recommend what to buy online, recognize biometric features to unlock phones, understand and respond to questions posed to virtual assistants, and even filter spam email, to name a few real-life applications.

According to IDC research, global spending on cognitive and AI solutions will grow at a 54.4% compound annual growth rate (CAGR) through 2020. Top use cases include quality management investigation and recommendation systems, diagnosis and treatment systems, automated customer service agents, automated threat intelligence and prevention systems, and fraud analysis and investigation.
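A compound annual growth rate applies the same percentage growth to each year's running total, so the effect multiplies rather than adds. The short sketch below illustrates what a 54.4% CAGR implies; the baseline of 1.0 and the four-year span are assumptions chosen only for illustration and are not figures from the IDC report.

```python
def compound_growth(start_value: float, cagr: float, years: int) -> float:
    """Value after `years` of growth at a constant compound annual rate."""
    return start_value * (1.0 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Inverse calculation: the constant annual rate linking two values."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Hypothetical baseline of 1.0 (not an IDC figure) over an assumed 4-year span.
multiple = compound_growth(1.0, 0.544, 4)
print(f"{multiple:.2f}x")  # 5.68x
```

In other words, at 54.4% a year, spending would multiply roughly 5.7 times over four years, compared with about 3.2 times (1 + 4 × 0.544) if the same percentage were simply added each year.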

Machine Learning and the Algorithm Economy

The growth of AI is directly linked to the growth of the Internet of Things (IoT), especially in automation, where more and more "things" operate with little to no human intervention. Machine learning, a branch of AI, develops techniques that allow computers to learn. Deep learning, a specialized form of machine learning, uses artificial neural networks that emulate how the human brain processes data and creates patterns for decision making.

By observing high volumes of complex data, algorithms "learn" to create better computing models to predict issues, solve problems, improve processes and increase efficiencies—basically, improve lives, societies and businesses.
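The idea of an algorithm "learning" from examples can be seen in its smallest form in a single artificial neuron, the building block that neural networks stack into layers. The sketch below is a classic perceptron, not a deep learning model: it sees a handful of hypothetical labeled examples (the logical AND function) and adjusts its weights whenever its prediction is wrong, until its outputs match the examples.

```python
def predict(weights, bias, x):
    # Weighted sum of the inputs followed by a simple step activation.
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)
            # When the prediction is wrong, nudge weights toward the right answer.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Toy training data (hypothetical): inputs paired with the desired AND output.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(AND_SAMPLES)
print([predict(w, b, x) for x, _ in AND_SAMPLES])  # [0, 0, 0, 1]
```

Deep networks follow the same principle of adjusting weights from data, but with many layers of neurons and gradient-based training instead of this simple error-correction rule.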

Forbes lists the top 10 AI and machine learning use cases:

- Data security – Predicting security breaches and reporting anomalies by observing and establishing patterns in how data in the cloud is accessed
- Personal security – Identifying things that human screeners might miss during security checks in airports, stadiums, cinemas and other venues
- Financial trading – Predicting and executing high-speed, high-volume trades
- Health care – Understanding disease risk factors in large populations and spotting patterns for early disease prevention
- Marketing personalization – Helping companies better understand their customers to deliver better, more personalized products and services
- Fraud detection – Spotting potential cases of fraud and distinguishing between legitimate and fraudulent transactions
- Recommendations – Analyzing people's activities and preferences to determine what they might like to buy next, and offering purchase recommendations
- Online search – Detecting patterns in search queries to provide better search results
- Natural language processing – Simulating the human ability to understand language and its meaning, helping to overcome language barriers
- Smart cars – Enabling self-driving cars that can understand their environment and make intelligent adjustments to ensure driver, passenger, pedestrian and infrastructure safety
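Several of these use cases, such as data security and fraud detection, rest on establishing a pattern of normal behavior and flagging departures from it. As a much simpler stand-in for the learned models used in practice, the sketch below flags values that lie unusually far from the mean in standard-deviation terms (a z-score test). The transaction amounts and the threshold of 2.0 are hypothetical.

```python
from statistics import mean, stdev


def flag_anomalies(values, threshold=2.0):
    """Return indices of values more than `threshold` standard deviations from the mean."""
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # all values identical: nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


# Hypothetical transaction amounts for one account; the last one is unusual.
amounts = [42.0, 38.5, 45.2, 40.1, 39.8, 44.0, 41.3, 950.0]
print(flag_anomalies(amounts))  # [7]
```

A single large outlier also inflates the standard deviation it is measured against, which is why production systems tend to use robust statistics or trained models rather than this basic test.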

Machine Learning Process

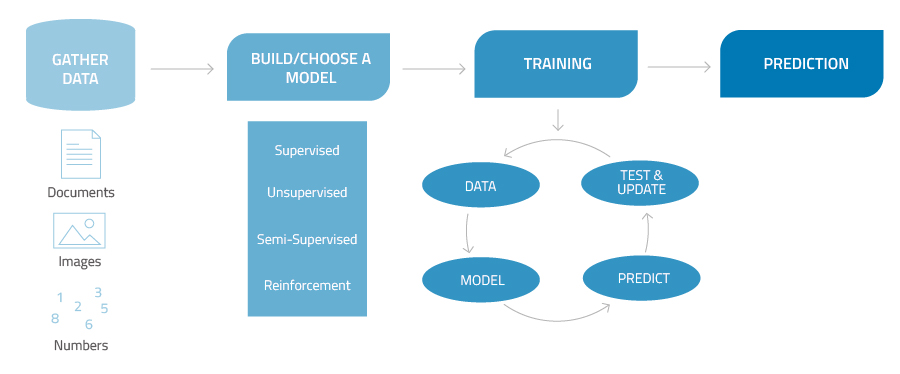

How does a machine learn to think like a human brain? There are essentially four steps, with several components in between.

- Gather data from multiple valid sources. At the initial stage, there must be enough data to apply machine learning to a particular problem. The data is then prepared for training by organizing it, discovering patterns, and identifying and analyzing relationships.

- Build or choose a model or machine learning algorithm. Several models exist, and the choice depends largely on the type of machine learning task. There are four types:

  Supervised learning refers to the task of inferring a function from training data in which inputs and outputs (desired outcomes) are clearly labeled.

  Unsupervised learning refers to the machine's ability to solve complex problems where training data is unlabeled and only input data is used. In a clustering problem, for example, the machine recognizes objects with similar characteristics, groups them together and assigns its own labels.

  Semi-supervised learning uses a combination of labeled and unlabeled data. This is particularly useful when reference data is incomplete or inaccurate. The machine uses reference data when available and fills in the gaps with "guesses."

  Reinforcement learning is an area of machine learning in which there are no labeled or unlabeled data sets. Instead, the algorithm (the agent) learns by interacting with its environment. If it performs correctly, it receives a reward; if it performs incorrectly, it receives a penalty. Over time, the agent learns to maximize its rewards and minimize penalties, using methods such as dynamic programming.

- Training. The bulk of machine learning happens at this stage, as the algorithm improves its abilities. The algorithm's actual output is compared with the output it should produce, its parameters are adjusted, and the process is repeated until it becomes adept. Evaluation follows: the algorithm is tested on data that was not used during training, to see how it performs on inputs it has not seen before. Parameters are then tuned further.

- Prediction. At this stage, the trained model derives meaning from new data and uses it to answer questions or solve problems.

Figure 1. Simplified depiction of the machine learning process.
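The four steps of the machine learning process can be sketched for the supervised case as follows. This is a minimal illustration, not a production pipeline: the data is synthetic (generated from an assumed rule y = 3x + 2 plus noise), the model is a straight line, and training uses plain gradient descent. All function names are hypothetical.

```python
import random


def make_dataset(n, seed=0):
    # Step 1 (gather): hypothetical labeled data following y = 3x + 2 plus noise.
    rng = random.Random(seed)
    xs = [rng.uniform(0, 10) for _ in range(n)]
    return [(x, 3 * x + 2 + rng.gauss(0, 0.5)) for x in xs]


def mse(w, b, data):
    # Mean squared error between predictions w*x + b and the true labels.
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(data, lr=0.01, epochs=2000):
    # Step 3 (train): compare output with targets, adjust w and b, repeat.
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


data = make_dataset(100)
train_set, test_set = data[:80], data[80:]  # hold out 20% for evaluation
w, b = train(train_set)                     # Step 2: the chosen model is y = w*x + b
print(f"w={w:.2f}, b={b:.2f}, test MSE={mse(w, b, test_set):.3f}")
print(f"prediction for x=4: {w * 4 + b:.2f}")  # Step 4 (predict)
```

The held-out test set plays the role of the evaluation step: the error is measured on examples the model never saw during training, which gives a more honest estimate of how it will perform on new data.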
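Unsupervised learning, where the machine groups similar objects and assigns its own labels, is often illustrated with k-means clustering. The sketch below assumes six hypothetical 2-D points and k = 2; it initializes centroids from evenly spaced input points for simplicity, whereas libraries typically use smarter schemes such as k-means++.

```python
import math


def kmeans(points, k, iterations=100):
    # Simple deterministic initialisation: evenly spaced points from the input.
    centroids = [points[i * len(points) // k] for i in range(k)]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged: no centroid moved
        centroids = new_centroids
    return labels, centroids


points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
labels, centroids = kmeans(points, 2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

No labels are supplied: the cluster numbers 0 and 1 are the machine's own labels, derived purely from how close the points are to one another.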
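Semi-supervised learning, where the machine fills gaps in reference data with "guesses," can be illustrated with a simple greedy self-training rule: repeatedly take the unlabeled point closest to any labeled point, give it that neighbor's label, and treat it as labeled from then on. This is one basic semi-supervised technique among many; the points and the "normal"/"faulty" labels are hypothetical.

```python
import math


def self_train(labeled, unlabeled):
    """Greedy self-training with a nearest-neighbour rule.

    labeled:   list of (point, label) pairs with known labels
    unlabeled: list of points without labels
    Returns every point paired with a known or guessed label.
    """
    labeled = list(labeled)
    remaining = list(unlabeled)
    while remaining:
        # Pick the unlabeled point that lies closest to any labeled point...
        _, idx, label = min(
            (math.dist(u, p), i, lab)
            for i, u in enumerate(remaining)
            for p, lab in labeled
        )
        # ...give it that neighbour's label (the "guess") and treat it as labeled.
        labeled.append((remaining.pop(idx), label))
    return labeled


known = [((0.0, 0.0), "normal"), ((10.0, 10.0), "faulty")]
unknown = [(1.0, 0.5), (2.0, 1.5), (9.0, 9.6), (8.0, 8.0)]
for point, label in self_train(known, unknown):
    print(point, label)
```

Because each guess becomes reference data for the next one, an early mistake can spread, which is why real systems usually only accept guesses they are confident about.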
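The reward-and-penalty loop of reinforcement learning can be sketched with tabular Q-learning, a model-free method built on the same Bellman-style value update that underlies dynamic programming. The environment is a hypothetical five-cell corridor: reaching the right-hand end earns a reward of +1, and every other move costs a small penalty. All names and parameter values are illustrative assumptions.

```python
import random

N_STATES = 5       # states 0..4 in a corridor; state 4 is the goal
ACTIONS = (0, 1)   # 0 = move left, 1 = move right


def step(state, action):
    """Hypothetical toy environment: reward at the goal, small penalty otherwise."""
    nxt = max(0, state - 1) if action == 0 else min(N_STATES - 1, state + 1)
    if nxt == N_STATES - 1:
        return nxt, 1.0, True    # reward for reaching the goal
    return nxt, -0.01, False     # penalty for every other move


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 100:
            # Epsilon-greedy: mostly exploit the best-known action, sometimes explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[state][a])
            nxt, reward, done = step(state, action)
            # Move the estimate toward reward plus discounted best future value.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state, steps = nxt, steps + 1
    return q


q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(policy)  # [1, 1, 1, 1]: always move right toward the goal
```

The agent is never told which move is correct; it discovers that moving right maximizes its cumulative reward purely from the rewards and penalties it receives.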

Data as Fuel

An important characteristic that sets this industrial revolution apart from previous ones is that it is fueled by data. Billions of devices, in everything from self-driving cars and smart factories to health care, intelligent homes and buildings, and smart cities, are generating staggering amounts of data.

The new breed of electric hypercars, such as the Rimac C_Two, features a state-of-the-art infotainment system, cameras and numerous sensors that can easily generate several terabytes of data per hour of driving. A typical offshore oil platform generates 1 TB to 2 TB of data per day, a commercial jet 40 TB per hour of flight, and a mining operation 2.4 TB every minute (Source).
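Because these figures are quoted per day, per hour and per minute, they are easier to compare once converted to a common unit. The sketch below normalizes the rates quoted above to terabytes per hour; it assumes each rate is sustained over the conversion period, which for the commercial jet applies only while it is in flight.

```python
# Seconds in each time unit used by the quoted rates.
SECONDS = {"minute": 60, "hour": 3600, "day": 86400}


def tb_per_hour(terabytes, per):
    """Convert a rate of `terabytes` per `per` into terabytes per hour."""
    return terabytes * SECONDS["hour"] / SECONDS[per]


rates = {
    "offshore oil platform (low)": tb_per_hour(1, "day"),
    "offshore oil platform (high)": tb_per_hour(2, "day"),
    "commercial jet (in flight)": tb_per_hour(40, "hour"),
    "mining operation": tb_per_hour(2.4, "minute"),
}
for source, rate in rates.items():
    print(f"{source}: {rate:.2f} TB/hour")
```

On this basis the mining operation's 144 TB per hour is more than three times the jet's in-flight rate and over a thousand times the oil platform's.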

The volume of data to be stored, processed and retrieved keeps growing at a relentless pace, and infrastructure is needed to handle it. Beyond the hype, one computing component is critical to making AI a reality: MEMORY.

Memory Demand on the Rise

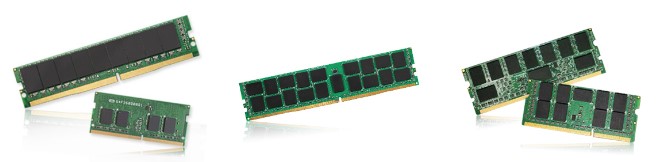

Dynamic Random Access Memory (DRAM) is an important part of data processing. The astounding amount of data being generated is driving unprecedented DRAM demand in the cloud, in data center applications, on premises and at the edge. In an interview with The Register, Micron CEO Sanjay Mehrotra said, "AI servers will require six times the amount of DRAM and twice the amount of SSDs compared with standard servers."

As AI workloads continue to grow, hyperscale data centers require more and more memory. In the first quarter of the year, DRAM supply remained tight, mainly because of massive data center construction projects, some of which are bigger than football fields. IDC defines hyperscale data centers as facilities that have "... a minimum of 5,000 servers and are at least 10,000 sq ft in size but generally much larger" (IDC, 2016).

While a large portion of AI workloads is headed for hyperscale data centers, machine learning is no longer confined to them; it is permeating every industry as AI adoption accelerates. The big challenge for memory infrastructure is not only the sheer volume of data from machine and deep learning applications, but also the need to move, process and store that data quickly.

ATP DRAM Modules for AI Workloads

ATP Electronics meets the growing demand for higher capacity, speed and reliability of memory products for AI workloads with its industrial-grade DRAM portfolio. ATP DRAM modules address memory bandwidth requirements in diverse deployment scenarios. The latest DDR4-2666 modules enable accelerated computing, with an increased interface speed that raises theoretical peak performance by 15% over the previous generation. Critical computing applications get a performance boost while keeping power consumption low at just 1.2V.
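A module's theoretical peak bandwidth is commonly estimated as its transfer rate multiplied by the width of its data bus. The sketch below assumes a standard 64-bit (8-byte) module data bus with ECC bits excluded and reports decimal gigabytes per second; the six-channel configuration is a hypothetical platform, not an ATP specification.

```python
def peak_bandwidth_gbs(mt_per_s, bus_width_bits=64, channels=1):
    """Theoretical peak bandwidth in GB/s (decimal).

    mt_per_s:       transfer rate in megatransfers per second (e.g. 2666 for DDR4-2666)
    bus_width_bits: data bus width per channel (64 bits for a standard DIMM, ECC excluded)
    channels:       number of memory channels populated in parallel
    """
    bytes_per_transfer = bus_width_bits // 8
    return mt_per_s * 1e6 * bytes_per_transfer * channels / 1e9


print(f"{peak_bandwidth_gbs(2666):.1f} GB/s per module")              # 21.3
print(f"{peak_bandwidth_gbs(2666, channels=6):.1f} GB/s, 6 channels")  # 128.0
```

The 21.3 GB/s result corresponds to the PC4-21300 designation used for DDR4-2666 modules. Sustained bandwidth in real workloads is lower than this theoretical figure.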

Figure 2. ATP offers a full range of DRAM solutions for the ever-growing memory requirements of artificial intelligence and machine learning workloads.

Available in different densities, speeds and form factors, ATP DRAM modules are constructed for heavy workloads and harsh operating conditions. The complete product line from SDRAM to DDR4 undergoes strict testing and validation from IC to system level, with major ICs sourced 100% from Tier 1 manufacturers. The thicker 30µ" gold finger plating ensures high-quality signal transmission, and select modules can operate at the industrial temperature range of -40°C to 85°C.

JEDEC compliance, 100% system-level testing, and guaranteed longevity support make these memory modules the ideal solution for high-performance computing requirements of AI workloads.

Visit the ATP DRAM products page for more information about the best memory solutions for your enterprise or industrial needs, or contact an ATP Representative to learn how our DRAM modules can meet the exacting demands of your growing enterprise or industrial applications in the emerging age of artificial intelligence.