What is over-provisioning?

When a solid state drive (SSD) arrives from the factory, you might be surprised to see that its usable storage space is less than the raw capacity of the NAND flash inside it. The part that you cannot see, access, or use is called "over-provisioned" space, and it is reserved for the controller's memory management functions. Over-provisioning is the standard industry practice of allocating a certain portion of the drive to controller management features.

Why is over-provisioning necessary?

Some users see over-provisioning as a waste of storage capacity. However, by guaranteeing permanent free space that is inaccessible to the user, over-provisioning lets the drive controller manage data traffic and NAND maintenance tasks in the background more efficiently. Because this reserve keeps the drive from ever being completely filled with data, background controller tasks always have room to run smoothly.

How much over-provisioned space is needed to ensure the SSD's best performance and endurance?

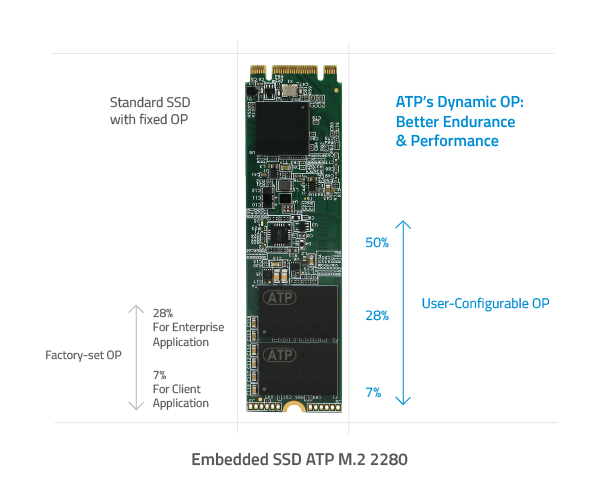

SSDs typically ship with a factory-set over-provisioned (OP) space of 7% for client applications or 28% for enterprise storage applications. Because workloads vary, being stuck with a fixed OP can cause problems: too little spare area compromises performance and makes the drive wear out faster. The optimal OP should be determined by the actual workload of the intended application.
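
The article does not spell out how an OP percentage is calculated. A minimal sketch, assuming the common convention OP% = (physical capacity − user capacity) / user capacity, is shown below; the function names and the 256 GB drive are hypothetical, and vendors differ on whether capacities are quoted in decimal GB or binary GiB, which shifts the exact figures.

```python
def op_percent(physical_gb: float, user_gb: float) -> float:
    """Spare area as a percentage of user-visible capacity (one common OP convention)."""
    if user_gb <= 0 or physical_gb < user_gb:
        raise ValueError("need physical_gb >= user_gb > 0")
    return (physical_gb - user_gb) / user_gb * 100.0


def user_capacity_for_op(physical_gb: float, target_op: float) -> float:
    """Inverse of op_percent: usable capacity that leaves target_op percent as spare area."""
    if target_op < 0:
        raise ValueError("target_op must be non-negative")
    return physical_gb / (1.0 + target_op / 100.0)


# Hypothetical drive with 256 GB of NAND exposed as 240 GB to the host.
print(f"{op_percent(256, 240):.1f}% OP")                 # 6.7% OP
# The same NAND sized for a 28% OP target.
print(f"{user_capacity_for_op(256, 28):.0f} GB usable")  # 200 GB usable
```

Raising the OP target on the same NAND simply lowers the capacity exposed to the host, which is the capacity-for-endurance trade-off discussed below.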

What causes SSDs to wear out?

SSDs can handle only a finite number of write cycles. Simply put, the more data you write to your SSD, the shorter its lifespan. SSDs use NAND flash memory, which wears out with repeated program and erase (P/E) cycles. Unlike traditional hard disk drives (HDDs), which can overwrite old data in place, SSDs write and erase data differently. Data is written in pages, which are grouped into blocks, and a page that already holds data cannot be overwritten. To write new data to it, the entire block has to be erased first.
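
The page/block rule above can be illustrated with a toy model. This is a minimal sketch, not how any real controller or ATP firmware is written: `NandBlock` is a hypothetical class, and the 4-pages-per-block size is an assumption chosen for readability (real blocks hold far more pages).

```python
class NandBlock:
    """Toy NAND block: each page can be programmed once; only a whole-block erase resets it."""

    def __init__(self, pages_per_block: int = 4):
        self.pages = [None] * pages_per_block  # None means the page is erased
        self.erase_count = 0                   # P/E cycles used by this block

    def program(self, page: int, data: bytes) -> None:
        if self.pages[page] is not None:
            raise ValueError(f"page {page} already programmed; erase the block first")
        self.pages[page] = data

    def erase(self) -> None:
        # Erase granularity is the block: every page is cleared at once.
        self.pages = [None] * len(self.pages)
        self.erase_count += 1


block = NandBlock()
block.program(0, b"old")
try:
    block.program(0, b"new")  # an HDD-style in-place overwrite is rejected
except ValueError as err:
    print(err)                # page 0 already programmed; erase the block first
block.erase()                 # costs one P/E cycle and clears the other pages too
block.program(0, b"new")
print(block.erase_count)      # 1
```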

To clear out invalid data, SSDs employ two important processes: garbage collection and Trim.

Garbage collection moves the still-valid data out of a block into other blocks so that the original block can be erased and reused.

Trim allows the host to inform the SSD which blocks of data are no longer in use, so the SSD can treat them as invalid and reclaim the space for new data.

Repeatedly programming and erasing the same memory location wears out that portion of the flash and eventually makes it unusable. This continuous cycle of relocating, rewriting, and erasing also adds to the SSD's workload and leads to drive degradation.
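
The interplay between garbage collection and Trim can be sketched in a few lines. This is a simplified illustration under stated assumptions: the `Block`, `trim`, and `garbage_collect` names are hypothetical, blocks are tracked only by the set of logical pages still valid in them, and the victim is chosen with a greedy "fewest valid pages" policy, which is one common textbook policy rather than any specific controller's algorithm.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Block:
    valid: set[int] = field(default_factory=set)  # logical pages still live in this block
    erase_count: int = 0                          # P/E cycles this block has used


def trim(blocks: list[Block], deleted: set[int]) -> None:
    """Trim: the host reports logical pages it no longer needs, so they stop counting as valid."""
    for block in blocks:
        block.valid -= deleted


def garbage_collect(blocks: list[Block], spare: Block) -> int:
    """Greedy GC: move live pages out of the block with the fewest of them, then erase it.

    Returns the number of pages copied, i.e. extra NAND writes the host never requested.
    """
    victim = min(blocks, key=lambda b: len(b.valid))
    copied = len(victim.valid)
    spare.valid |= victim.valid  # relocate the still-valid data
    victim.valid = set()
    victim.erase_count += 1      # erasing consumes one P/E cycle
    return copied


# Without Trim, pages 3 and 4 were deleted by the host but still look live, so they are copied.
blocks = [Block({0, 1, 2}), Block({3, 4}), Block({5, 6})]
print(garbage_collect(blocks, Block()))  # 2

# With Trim, the SSD already knows pages 3 and 4 are unused: nothing needs copying.
blocks = [Block({0, 1, 2}), Block({3, 4}), Block({5, 6})]
trim(blocks, {3, 4})
print(garbage_collect(blocks, Block()))  # 0
```

Every copied page is an extra NAND write and every erase consumes a P/E cycle, which is why this background activity contributes to wear.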

How do you measure SSD performance and endurance?

Write amplification index (WAI)

WAI is the ratio of the total amount of data written to the NAND flash to the total amount of data written by the host. A WAI value of 1 is ideal, as it means that 1 MB of data from the host is written as exactly 1 MB on the NAND. As discussed above, SSD blocks must be erased before they can be written to, so write-intensive workloads trigger more garbage collection and therefore more extra writes. The data written to the NAND is amplified: it becomes greater than what the host intended to write.
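
As a minimal sketch, WAI is a single division once both counters are known. The function name below is hypothetical, and where the two totals come from is drive-specific (some drives report host and NAND write totals through SMART data or vendor tools).

```python
def write_amplification(nand_bytes: int, host_bytes: int) -> float:
    """WAI = bytes physically programmed to NAND / bytes the host asked to write."""
    if host_bytes <= 0:
        raise ValueError("host_bytes must be positive")
    return nand_bytes / host_bytes


MB = 1024 * 1024
print(write_amplification(1 * MB, 1 * MB))  # 1.0: ideal, no extra writes
# 1 MB from the host that forced another 2 MB of garbage-collection copies:
print(write_amplification(3 * MB, 1 * MB))  # 3.0
```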

Terabytes written (TBW)

TBW is another way to gauge SSD endurance. TBW refers to the total amount of data that can be written to a storage device over its lifetime before it is expected to wear out. A higher TBW means a longer SSD service life.
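
A common back-of-the-envelope relation links TBW to WAI: the NAND can absorb roughly (capacity × rated P/E cycles) of writes, and every host byte costs WAI bytes of NAND writes. The sketch below assumes that simplified model (it ignores uneven wear, retention margins, and similar factors) and is not how ATP or any vendor actually rates TBW; the function name and the 3,000 P/E-cycle figure are placeholders.

```python
def estimate_tbw(nand_tb: float, pe_cycles: int, wai: float) -> float:
    """Rough host TBW: total writes the NAND can absorb, divided by write amplification."""
    if wai < 1.0:
        raise ValueError("this simple model assumes WAI >= 1 (no compression)")
    return nand_tb * pe_cycles / wai


# Placeholder inputs: 1 TB of NAND rated for 3,000 P/E cycles.
print(estimate_tbw(1.0, 3000, 2.0))  # 1500.0
print(estimate_tbw(1.0, 3000, 4.0))  # 750.0: doubling WAI halves TBW
```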

How can I decrease WAI for better SSD performance and increase TBW for longer life span?

Write-intensive applications naturally increase WAI and decrease TBW. To decrease WAI and extend the SSD's life span with a higher TBW, a higher OP (less usable storage) should be implemented; this helps ensure stability, improve performance and extend the SSD's usage life.

Read-intensive applications, on the other hand, require less endurance, so high WAI and SSD degradation are less of a concern; a lower OP (more usable storage) may therefore be implemented.

How can ATP's Dynamic Over-Provisioning solution enhance the performance and extend the usage life of my SSD?

Most SSDs on the market come with a fixed OP percentage, giving users no flexibility to evaluate their workloads and optimally configure their SSDs for the best-possible performance and endurance.

The ATP Dynamic Over-Provisioning solution gives users the freedom to configure SSDs according to the actual workloads of specific applications to optimize performance, endurance and cost.

Different OP percentages can affect SSD performance and endurance. Testing done by ATP engineers shows that enterprises with write-intensive workloads can benefit greatly from a high OP percentage.

In random write performance testing, 4K random write speed with a low 7% OP dropped by around 90% after prolonged testing. Setting a higher OP percentage may lead to better, more consistent performance than a lower OP percentage.

OP percentage is inversely related to WAI, so a higher OP percentage helps reduce WAI and improves performance. OP percentage is also positively related to TBW, so a higher OP percentage delivers better endurance.
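
The OP/WAI relationship can be reproduced qualitatively with a small simulation. This is a toy model under stated assumptions, not ATP's test method or firmware: `simulate_wai` is a hypothetical function, the drive is a page-mapped SSD with 64 blocks of 64 pages, the workload is uniform random single-page overwrites after one full sequential fill, and garbage collection uses a greedy victim policy while keeping two erased blocks in reserve. Its absolute numbers are not comparable to real drives; only the trend (less spare area, more relocation writes) carries over.

```python
import random


def simulate_wai(op_percent: float, blocks: int = 64, pages_per_block: int = 64,
                 host_writes: int = 100_000, seed: int = 1) -> float:
    """Measure WAI of a toy page-mapped SSD under uniform random single-page overwrites."""
    logical_pages = int(blocks * pages_per_block / (1 + op_percent / 100))
    if logical_pages >= (blocks - 2) * pages_per_block:
        raise ValueError("OP too small for this toy model")
    rng = random.Random(seed)
    valid = [set() for _ in range(blocks)]  # live logical pages in each physical block
    owner = {}                              # logical page -> block holding its live copy
    free = list(range(1, blocks))           # erased blocks; block 0 starts as the active block
    is_free = [b != 0 for b in range(blocks)]
    active, fill, nand_writes = 0, 0, 0

    def program(lpn: int) -> None:
        # Append to the active block; any older copy of this logical page becomes invalid.
        nonlocal active, fill, nand_writes
        if fill == pages_per_block:
            active, fill = free.pop(), 0
            is_free[active] = False
        old = owner.get(lpn)
        if old is not None:
            valid[old].discard(lpn)
        valid[active].add(lpn)
        owner[lpn] = active
        fill += 1
        nand_writes += 1

    def garbage_collect() -> None:
        # Greedy policy: reclaim the full block with the fewest live pages.
        victim = min((b for b in range(blocks) if b != active and not is_free[b]),
                     key=lambda b: len(valid[b]))
        for lpn in list(valid[victim]):
            program(lpn)     # relocation = a NAND write the host never issued
        free.append(victim)  # victim now holds no live data: "erase" it
        is_free[victim] = True

    def host_write(lpn: int) -> None:
        while len(free) < 2:  # keep a small reserve of erased blocks
            garbage_collect()
        program(lpn)

    for lpn in range(logical_pages):  # precondition: fill every logical page once
        host_write(lpn)
    nand_writes = 0                   # measure only the random-overwrite phase
    for _ in range(host_writes):
        host_write(rng.randrange(logical_pages))
    return nand_writes / host_writes


for op in (7, 14, 28):
    print(f"OP {op}%: WAI ~ {simulate_wai(op):.2f}")
```

The greedy policy and uniform random workload are simplifications; real controllers use more elaborate victim selection and real workloads have hot and cold data, both of which change the numbers.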

How can ATP's Dynamic Over-Provisioning solution optimize cost?

SSDs with a lower OP percentage offer more capacity to users, making their cost per gigabyte appear lower. In reality, however, the higher cost will be felt in the long term: a lower OP percentage, although offering more capacity, leads to faster SSD degradation and more frequent drive replacements. High OP settings are for users who are willing to trade off capacity for extra performance and endurance.
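
One way to make this trade-off concrete is to compare price per usable gigabyte with price per terabyte of rated endurance (price / TBW). The sketch below is illustrative only: `cost_metrics` is a hypothetical helper, and the price, capacity, and TBW values are placeholders, not ATP prices or endurance ratings; substitute the figures from the actual drive datasheets.

```python
from __future__ import annotations


def cost_metrics(price: float, usable_gb: float, tbw_tb: float) -> tuple[float, float]:
    """Return (price per usable GB, price per TB of rated endurance)."""
    if usable_gb <= 0 or tbw_tb <= 0:
        raise ValueError("capacity and TBW must be positive")
    return price / usable_gb, price / tbw_tb


# Placeholder figures for illustration only; not measured or rated values.
for label, usable_gb, tbw_tb in (("lower OP", 240, 400), ("higher OP", 200, 800)):
    per_gb, per_tb = cost_metrics(100.0, usable_gb, tbw_tb)
    print(f"{label}: {per_gb:.3f} per usable GB, {per_tb:.3f} per TB written")
```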

By enabling users to configure the OP setting based on actual needs, the ATP Dynamic Over-Provisioning solution maximizes the performance and life span of the SSD, translating to greater productivity and savings in the long term. For more information on the Dynamic Over-Provisioning solution, visit the ATP website or contact an ATP Distributor/Representative in your area.